The $7.2 Million S3 Bucket Mistake

In September 2024, a Fortune 500 financial services company discovered that a misconfigured S3 bucket had been exposing 100 million customer records for 18 months. The breach included SSNs, credit scores, and financial histories.

The incident cost $7.2 million in total damages, including regulatory fines, legal settlements, forensic investigation, customer notification, credit monitoring services, and lost business.

The root cause? A single S3 bucket with public read access enabled and no encryption. The bucket was discovered by security researchers who found it indexed by search engines.

How the Breach Unfolded

March 2023: Initial Misconfiguration

Developer accidentally enables public access while testing data export feature. Bucket policy allows public reads.

March 2023 - Sept 2024: Silent Exposure

100M customer records accessible to anyone with the bucket URL. No monitoring alerts configured for public access changes.

September 2024: Discovery

Security researcher finds bucket via automated scanning. Data includes full PII, financial records, and credit information.

October 2024 - Present: Aftermath

Class action lawsuits, regulatory investigations, $7.2M in direct costs, ongoing reputation damage, and customer churn.

Why S3 Misconfigurations Are So Dangerous for SMBs

Amazon S3 buckets store some of the world's most sensitive data, yet they're also the source of the majority of cloud data breaches. For SMBs, a single S3 misconfiguration can be catastrophic.

The Four Critical Business Risks

S3 breaches often trigger severe regulatory penalties:

- GDPR: Up to 4% of annual revenue or €20M (whichever is higher)

- CCPA: Up to $7,500 per intentional violation

- HIPAA: $50,000 to $1.9M per incident, plus potential criminal charges

- PCI DSS: $50,000 to $90,000 per month until compliance is restored

SMB Reality: A 50-person company with 10,000 customer records could face $300,000+ in GDPR fines alone.

S3 breaches create immediate operational crises:

- Mandatory data breach notifications to customers

- Credit monitoring services ($15-30 per affected customer)

- Emergency incident response and forensics ($200-500/hour)

- Customer churn (average 35% for SMBs after data breaches)

Data breaches often trigger expensive legal proceedings:

- Class action lawsuits from affected customers

- Legal defense costs ($500-1,000/hour for data breach attorneys)

- Settlement amounts (often $50-200 per affected record)

- D&O insurance claims and premium increases

Long-term business impact often exceeds immediate costs:

- Loss of competitive advantage and intellectual property

- Inability to win enterprise contracts requiring security certifications

- Increased insurance premiums and security audit requirements

- Talent acquisition challenges due to reputation damage

True Cost of S3 Data Breach for SMBs
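The per-record figures quoted above can be combined into a rough range. The following is a minimal sketch, assuming 10,000 affected records (the size used in the SMB example earlier) and counting only credit monitoring and settlements. Fines, forensics, legal fees, and churn are left out, so the real total would be higher. The function name is illustrative.

```python
# Per-record cost ranges quoted in the lists above (USD).
CREDIT_MONITORING = (15, 30)   # per affected customer
SETTLEMENT = (50, 200)         # per affected record


def per_record_exposure(records: int) -> tuple[int, int]:
    """Return (low, high) for credit monitoring plus settlements only."""
    low = records * (CREDIT_MONITORING[0] + SETTLEMENT[0])
    high = records * (CREDIT_MONITORING[1] + SETTLEMENT[1])
    return low, high


# Assumed example size: the 10,000-record company mentioned earlier.
low, high = per_record_exposure(10_000)
print(f"${low:,} to ${high:,}")  # $650,000 to $2,300,000
```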

The 5 Most Common S3 Security Threats

Understanding these threats helps you prioritize your security efforts and understand why each configuration step matters.

Public Access Misconfigurations

95% of S3 breaches start here. Accidental public read/write permissions expose entire buckets to the internet. Often caused by overly permissive policies or unclear AWS console settings.

Unencrypted Data at Rest

78% of exposed S3 data is unencrypted. When buckets are compromised, unencrypted data provides immediate value to attackers without additional decryption efforts.

Overprivileged IAM Access

Insider threats and credential compromise are amplified by excessive S3 permissions. Users with unnecessary admin access can accidentally or maliciously expose data.

Missing Access Logging

Blind spot attacks exploit the lack of access monitoring. Without logging, organizations can't detect unauthorized access or investigate the scope of breaches.

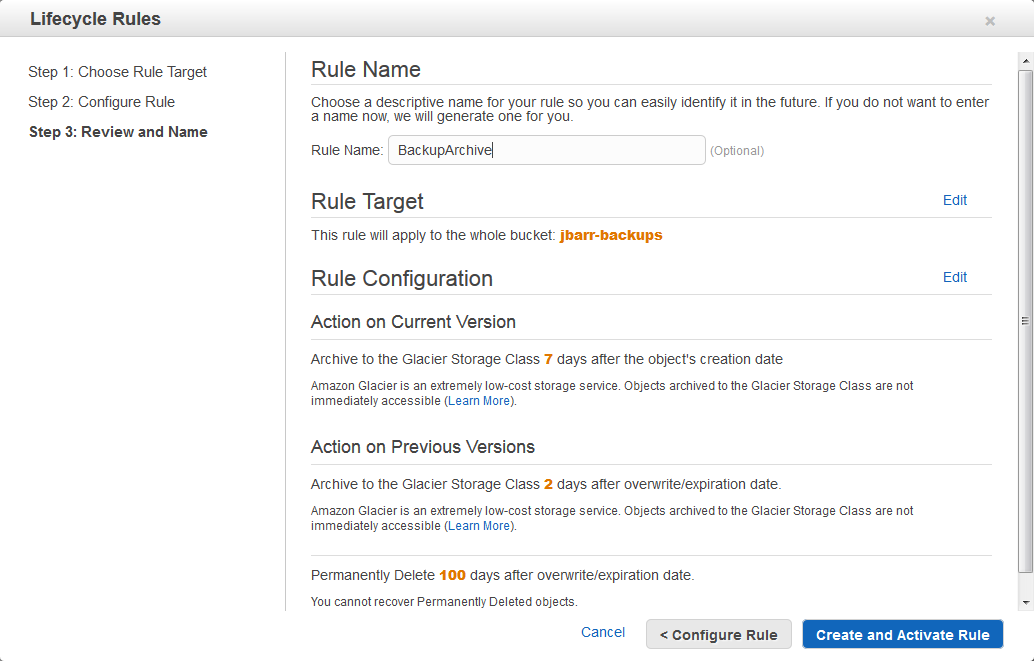

Inadequate Lifecycle Management

Data proliferation and compliance violations occur when sensitive data persists longer than necessary, expanding the attack surface and regulatory exposure.

The first and most critical step is ensuring your S3 buckets cannot be accessed publicly. This single configuration prevents 95% of S3 data breaches.

Prerequisites:

- AWS account with S3 administrative privileges

- List of all S3 buckets in your account

- Understanding of which buckets (if any) legitimately need public access

Console Steps:

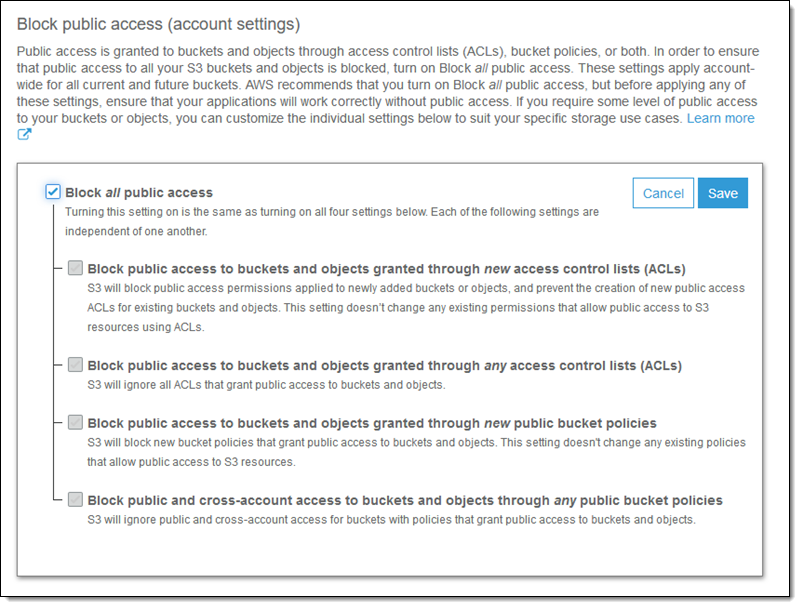

1.1 Account-Level Public Access Block

- Sign in to AWS Console and navigate to S3

- In the left sidebar, click "Block Public Access settings for this account"

- Click "Edit" on the account-level settings

- Check ALL four options:

  - Block public access to buckets and objects granted through new ACLs

  - Block public access to buckets and objects granted through any ACLs

  - Block public access to buckets and objects granted through new public bucket or access point policies

  - Block public and cross-account access to buckets and objects through any public bucket or access point policies

- Click "Save changes"

- Type "confirm" to acknowledge the change

Screenshot: S3 Account-level Block Public Access settings

All four checkboxes should be enabled for maximum security
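The same account-level setting can also be applied from a script. This is a minimal sketch, assuming boto3 is installed and credentials are configured. The helper name and the account ID are placeholders. The four keys are the S3 API names for the four console checkboxes, in the same order.

```python
# The four settings from step 1.1, named as the S3 API expects them.
BLOCK_ALL = {
    "BlockPublicAcls": True,        # new ACLs
    "IgnorePublicAcls": True,       # any ACLs
    "BlockPublicPolicy": True,      # new public policies
    "RestrictPublicBuckets": True,  # any public policies
}


def block_account_public_access(s3control_client, account_id: str) -> None:
    """Turn on all four Block Public Access settings for one account."""
    s3control_client.put_public_access_block(
        AccountId=account_id,
        PublicAccessBlockConfiguration=BLOCK_ALL,
    )


# Usage (requires boto3 and credentials; the account ID is a placeholder):
#   import boto3
#   block_account_public_access(boto3.client("s3control"), "111122223333")
```

Passing the client in as an argument keeps the helper easy to try against a stub before it is pointed at a real account.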

1.2 Verify Individual Bucket Settings

- Return to S3 bucket list

- For each bucket, click the bucket name

- Go to "Permissions" tab

- Under "Block public access", verify all settings show "On"

- If any show "Off", click "Edit" and enable all options
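Clicking through every bucket gets tedious once there are more than a handful. The per-bucket check could be scripted roughly as follows. This is a sketch that assumes a boto3 S3 client is passed in; the function names are illustrative. A bucket with no Block Public Access configuration at all is treated as having every setting off.

```python
REQUIRED = ("BlockPublicAcls", "IgnorePublicAcls",
            "BlockPublicPolicy", "RestrictPublicBuckets")


def disabled_settings(config: dict) -> list[str]:
    """Return the Block Public Access settings that are not switched on."""
    return [name for name in REQUIRED if not config.get(name, False)]


def audit_buckets(s3_client) -> dict[str, list[str]]:
    """Map each bucket name to the settings it still has turned off."""
    report = {}
    for bucket in s3_client.list_buckets()["Buckets"]:
        name = bucket["Name"]
        try:
            response = s3_client.get_public_access_block(Bucket=name)
            config = response["PublicAccessBlockConfiguration"]
        except Exception as exc:
            # botocore's ClientError carries the error code in exc.response.
            code = getattr(exc, "response", {}).get("Error", {}).get("Code")
            if code != "NoSuchPublicAccessBlockConfiguration":
                raise
            config = {}  # nothing configured: treat every setting as off
        report[name] = disabled_settings(config)
    return report


# Usage (requires boto3 and credentials):
#   import boto3
#   for name, off in audit_buckets(boto3.client("s3")).items():
#       if off:
#           print(name, "is missing:", ", ".join(off))
```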

1.3 Remove Existing Public ACLs and Policies

- For each bucket, check the "Access Control List (ACL)" section

- Remove any permissions for "Everyone (public access)" or "Authenticated Users group"

- In the "Bucket policy" section, delete any statements that allow access to "Principal": "*"

- Save all changes
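The ACL and policy review can be expressed as two small checks over the data that S3 returns. This is a sketch using only the standard library. It assumes the ACL dictionary has the shape returned by boto3's `get_bucket_acl` and that the policy is the JSON string returned by `get_bucket_policy`; the function names are illustrative. Only `Allow` statements are flagged, since a `Deny` statement with a wildcard principal restricts access rather than granting it.

```python
import json

# Group URIs that S3 uses for "Everyone" and "Authenticated Users".
PUBLIC_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers",
}


def public_grants(acl: dict) -> list[dict]:
    """Return the ACL grants that target a public group."""
    return [grant for grant in acl.get("Grants", [])
            if grant.get("Grantee", {}).get("URI") in PUBLIC_GROUPS]


def wildcard_statements(policy_json: str) -> list[dict]:
    """Return Allow statements whose principal is the wildcard "*"."""
    statements = json.loads(policy_json).get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may not be in a list
        statements = [statements]
    flagged = []
    for stmt in statements:
        principal = stmt.get("Principal")
        aws = principal.get("AWS") if isinstance(principal, dict) else None
        is_wildcard = (principal == "*" or aws == "*"
                       or (isinstance(aws, list) and "*" in aws))
        if stmt.get("Effect") == "Allow" and is_wildcard:
            flagged.append(stmt)
    return flagged
```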

1.4 Handle Legitimate Public Access Needs

If you need to serve public content, use these secure alternatives:

- Static websites: Use CloudFront with Origin Access Control (OAC), the successor to the legacy Origin Access Identity (OAI)

- Public downloads: Generate presigned URLs with expiration

- CDN content: CloudFront with proper security headers

- API responses: API Gateway with proper authentication
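For the presigned URL option, a short-lived download link can be generated without making the bucket or object public. This is a minimal sketch, assuming a boto3 S3 client is passed in. The helper name, bucket, key, and one-hour default are illustrative choices.

```python
def presigned_download_url(s3_client, bucket: str, key: str,
                           expires_seconds: int = 3600) -> str:
    """Return a time-limited GET link for one private object."""
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_seconds,  # the link stops working after this
    )


# Usage (requires boto3 and credentials; names are placeholders):
#   import boto3
#   url = presigned_download_url(boto3.client("s3"),
#                                "example-bucket", "reports/q3.pdf", 900)
```

The link carries the permissions of the credentials that signed it, so it is worth signing with a role that can read only the objects meant for download.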

Encryption protects your data even if bucket access controls fail. It's required by most compliance frameworks and adds virtually no cost or complexity.

Console Steps:

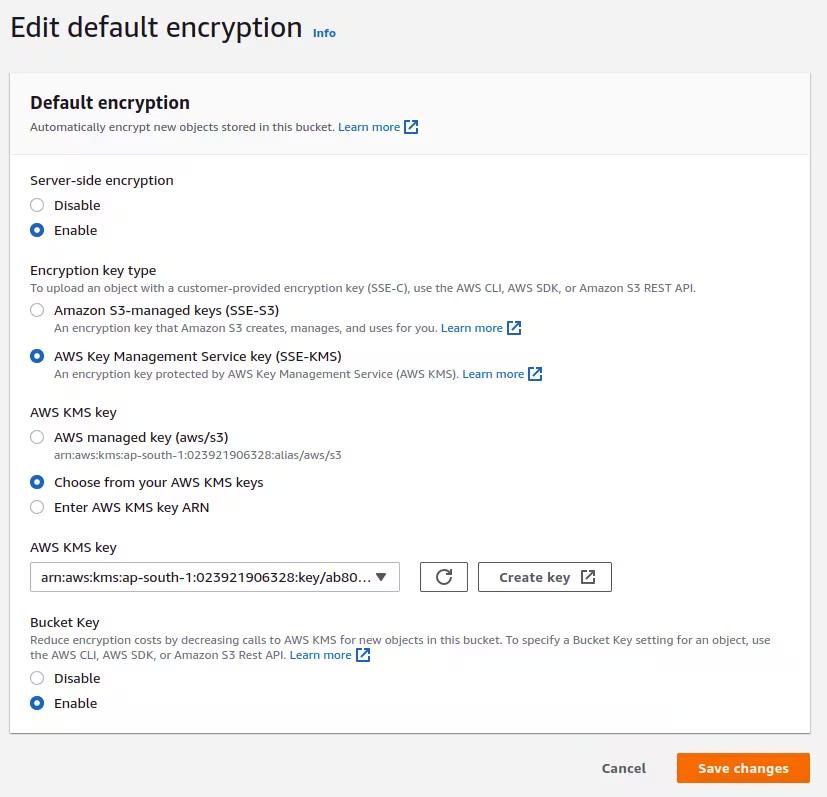

2.1 Enable Default Encryption

- For each S3 bucket, go to "Properties" tab

- Find "Default encryption" section and click "Edit"

- Select "Server-side encryption with Amazon S3 managed keys (SSE-S3)"

- For sensitive data, consider "Server-side encryption with AWS KMS keys (SSE-KMS)"

- Enable "Bucket Key" to reduce KMS costs (if using SSE-KMS)

- Click "Save changes"

Screenshot: S3 Default Encryption configuration

SSE-S3 is sufficient for most SMBs; SSE-KMS provides additional control
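The console steps in 2.1 correspond to a single API call. This is a sketch, assuming a boto3 S3 client is passed in; the function names and the optional `kms_key_id` parameter are illustrative. With no key ID it selects SSE-S3. With a key ID it selects SSE-KMS and turns on Bucket Key.

```python
def default_encryption_config(kms_key_id=None) -> dict:
    """Build the default-encryption rule: SSE-S3, or SSE-KMS if a key is given."""
    if kms_key_id is None:
        rule = {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
    else:
        rule = {
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_id,
            },
            "BucketKeyEnabled": True,  # fewer KMS requests, lower cost
        }
    return {"Rules": [rule]}


def enable_default_encryption(s3_client, bucket: str, kms_key_id=None) -> None:
    """Apply the default-encryption rule to one bucket."""
    s3_client.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=default_encryption_config(kms_key_id),
    )


# Usage (requires boto3 and credentials; the bucket name is a placeholder):
#   import boto3
#   enable_default_encryption(boto3.client("s3"), "example-bucket")
```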

2.2 Choose the Right Encryption Method

- SSE-S3 (Recommended for most SMBs): No additional cost, automatic key management

- SSE-KMS: Additional key control, audit trails, $0.03 per 10,000 requests

- SSE-C: Customer-provided keys, significant operational overhead
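To put the SSE-KMS request price in perspective, here is a rough calculation. It assumes 5 million KMS requests per month, which is an illustrative volume rather than a measured one, and it covers only the per-request charge quoted above.

```python
KMS_PRICE_PER_10K_REQUESTS = 0.03  # USD, the figure quoted above


def kms_request_cost(requests_per_month: int) -> float:
    """Estimate the monthly KMS request charge in USD."""
    return requests_per_month / 10_000 * KMS_PRICE_PER_10K_REQUESTS


# Assumed volume: 5 million KMS requests in a month.
cost = kms_request_cost(5_000_000)
print(f"${cost:.2f} per month")  # $15.00 per month
```

Enabling Bucket Key reduces the number of requests S3 sends to KMS, so the actual charge for a given workload would typically be lower.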

2.3 Enforce Encryption with Bucket Policy

Prevent unencrypted uploads by adding this policy:
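One common way to write such a policy is sketched below. It is a widely used pattern, not necessarily the exact policy intended here. It denies any `PutObject` request that does not ask for SSE-S3 encryption. The bucket name and statement IDs are placeholders. Note the trade-off: the second statement also rejects uploads that omit the encryption header and rely on the bucket's default encryption, so clients must send the header explicitly.

```python
import json


def require_sse_policy(bucket: str) -> str:
    """Build a bucket policy that denies uploads not requesting SSE-S3."""
    resource = f"arn:aws:s3:::{bucket}/*"
    header = "s3:x-amz-server-side-encryption"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {   # header present but set to something other than AES256
                "Sid": "DenyWrongEncryptionHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": resource,
                "Condition": {"StringNotEquals": {header: "AES256"}},
            },
            {   # header missing entirely
                "Sid": "DenyMissingEncryptionHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": resource,
                "Condition": {"Null": {header: "true"}},
            },
        ],
    }
    return json.dumps(policy, indent=2)


# "example-bucket" is a placeholder; paste the output into the bucket policy
# editor, or pass it to boto3's put_bucket_policy(Bucket=..., Policy=...).
print(require_sse_policy("example-bucket"))
```

For SSE-KMS buckets, the same structure applies with `aws:kms` in place of `AES256`.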

Configure transitions based on data access patterns and compliance requirements